Valence project

By Ward Dehairs, September 9, 2021

A while ago I worked temporarily as a consultant for a project at Imec, in collaboration with Ghent University. The project was, among other things, about exploring human interactions with technology: in what ways workers can be aided by robots and algorithms, and how the effectiveness of a given technology can be quantified.

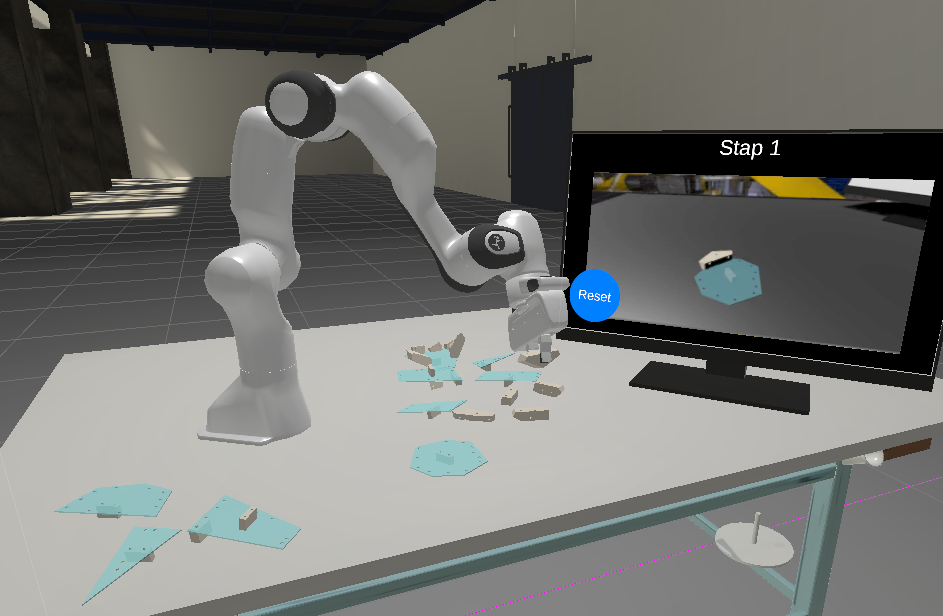

The project I was called in to help with was a VR affair, complete with motion-control gloves, in which the user is given a simple assembly task, like a Lego construction with a manual. Central to this is the co-bot, which assists the human by handing over the right pieces and showing the correct location and orientation.

My job was to program the behaviour of the robot, as well as the user interactions with it and the assembly task itself.

I hadn't worked on a VR project before, so it was quite an experience getting a free home VR set to test and play around with. I decided to download Beat Saber while I was at it; might as well get the most out of the setup while I had it.

Some detailed information about developing the Valence app:

For the co-bot I settled on writing an IK (inverse kinematics) algorithm that would automatically move the joints to get the head to a given target location. With that in place, I could easily program complex movement behaviour by animating this target point, with some minor extras such as closing the clamps exactly using raycasts. The IK algorithm was the hard part. I went for a customized gradient-descent algorithm that simply nudges each piece of the arm a little bit, slowly looking for the rotations that bring the head closer to its target. I've been looking to generalize this algorithm so I can turn it into a new Unity asset, but this has proven to be quite challenging.
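As a rough illustration of the nudging idea (not the actual project code, which was written for Unity), here is a minimal Python sketch for a flat 2D arm. The function names, the segment lengths, the step size and the use of squared distance as the cost are all assumptions made for the example.

```python
import math

def head_position(lengths, angles):
    """Forward kinematics: position of the arm's head for the given joint angles."""
    x = y = heading = 0.0
    for length, angle in zip(lengths, angles):
        heading += angle  # each joint rotates relative to the previous segment
        x += length * math.cos(heading)
        y += length * math.sin(heading)
    return x, y

def cost(lengths, angles, target):
    """Squared distance between the head and the target point."""
    x, y = head_position(lengths, angles)
    return (x - target[0]) ** 2 + (y - target[1]) ** 2

def solve_ik(lengths, angles, target, step=0.03, nudge=1e-5,
             iterations=5000, tolerance=1e-4):
    """Gradient descent on the joint angles, using small nudges to estimate the slope."""
    angles = list(angles)
    for _ in range(iterations):
        if math.sqrt(cost(lengths, angles, target)) < tolerance:
            break
        gradient = []
        for i in range(len(angles)):
            up = angles.copy()
            down = angles.copy()
            up[i] += nudge
            down[i] -= nudge
            # Central difference: how much does the cost change if joint i is nudged?
            slope = (cost(lengths, up, target) - cost(lengths, down, target)) / (2 * nudge)
            gradient.append(slope)
        # Move every joint a little in the direction that reduces the cost.
        angles = [a - step * g for a, g in zip(angles, gradient)]
    return angles

# Hypothetical three-segment arm reaching for a point within its range.
lengths = [1.0, 1.0, 0.5]
target = (1.0, 1.5)
solution = solve_ik(lengths, [0.3, 0.3, 0.3], target)
print(head_position(lengths, solution))
```

A real robot arm works in 3D with joint limits and can run into awkward configurations, which is presumably where most of the difficulty in generalizing the approach lies.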

The assembly task itself consists of snapping pieces into the right place with the right orientation. There is only one correct orientation, but of course the player should be able to get it wrong as well. This leads to a vast number of possible connections, which needs a clever approach to stay manageable. I created a system of "nodes", which mark points on a given piece where it can connect to the node of another piece. The node connections allow the next piece to snap onto an existing assembly once it is aligned to within a given margin. A node connection leaves the piece one degree of freedom: rotation about the connecting axis. So far nothing too outlandish, just a bit of middle-range programming required.

The big problem, though, is actually arranging the nodes on the pieces in the first place. There are hundreds of connection points throughout the assembly model, so putting a GameObject on each one and fine-tuning its position and orientation would have been quite tedious. I decided to write an algorithm that would automatically detect the connection holes and put the nodes on top of them. The algorithm is basically just a clustering algorithm that looks for large concentrations of vertices in the model. The developer only has to tweak the search parameters to get a very good first guess at the node layout. It still requires a little bit of manual clean-up afterwards, but it is bound to save many development hours.
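The node detection described above can be sketched roughly as follows. This is a simple greedy clustering written in Python for illustration, not the algorithm used in the project; `find_node_candidates`, `radius` and `min_count` are hypothetical names standing in for the "search parameters" the developer would tweak.

```python
import math

def find_node_candidates(vertices, radius, min_count):
    """Group nearby vertices and return the centroids of the dense groups."""
    clusters = []  # each cluster is [sum_x, sum_y, sum_z, count]
    for vertex in vertices:
        for cluster in clusters:
            count = cluster[3]
            centroid = (cluster[0] / count, cluster[1] / count, cluster[2] / count)
            if math.dist(vertex, centroid) <= radius:
                # Close enough to an existing cluster: merge the vertex into it.
                for axis in range(3):
                    cluster[axis] += vertex[axis]
                cluster[3] += 1
                break
        else:
            # No cluster nearby: this vertex starts a new one.
            clusters.append([vertex[0], vertex[1], vertex[2], 1])
    # Only dense clusters (e.g. the rim of a hole) become node candidates.
    return [(c[0] / c[3], c[1] / c[3], c[2] / c[3])
            for c in clusters if c[3] >= min_count]

def ring(cx, cy, r, n=8):
    """Made-up test data: n vertices on a small circle, like the rim of a hole."""
    return [(cx + r * math.cos(2 * math.pi * k / n),
             cy + r * math.sin(2 * math.pi * k / n), 0.0) for k in range(n)]

# Two "holes" plus a few stray vertices elsewhere on the mesh.
vertices = (ring(0.0, 0.0, 0.1) + ring(5.0, 0.0, 0.1)
            + [(2.0, 2.0, 0.0), (3.0, -1.0, 1.0), (-2.0, 1.0, 0.0)])
nodes = find_node_candidates(vertices, radius=0.5, min_count=6)
print(nodes)
```

The result depends on the order of the vertices and on the two parameters, which fits the description of a first guess that still needs some manual clean-up.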

Setting up VR reliably was another big issue. Each VR setup is slightly different, and re-calibrating is very tedious, especially in the context of the experiments for which the app is intended. This led me to write my own calibration sequence, which automatically looks for connected hardware and sets offsets, positions and rotations to place the user neatly in front of their virtual desk. This algorithm had the annoying tendency of breaking whenever one of the hardware pieces needed an update, but it was essential to actually doing the experiment.
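The offset part of such a calibration can be illustrated with a little geometry. The Python sketch below is an assumption-laden example, not the project's calibration code: it works in the horizontal plane only, takes the headset pose as given, and computes the rotation and translation that put the user at a chosen seat pose. All names (`calibrate`, `apply_offset`, the pose values) are made up for the example.

```python
import math

def rotate(point, yaw):
    """Rotate a point (x, z) in the horizontal plane by yaw radians."""
    x, z = point
    c, s = math.cos(yaw), math.sin(yaw)
    return (c * x - s * z, s * x + c * z)

def calibrate(head_pos, head_yaw, seat_pos, seat_yaw):
    """Find the rig offset that maps the measured headset pose onto the seat pose."""
    yaw_offset = seat_yaw - head_yaw
    rx, rz = rotate(head_pos, yaw_offset)
    translation = (seat_pos[0] - rx, seat_pos[1] - rz)
    return yaw_offset, translation

def apply_offset(offset, pos, yaw):
    """Apply a rig offset to a tracked pose."""
    yaw_offset, (tx, tz) = offset
    rx, rz = rotate(pos, yaw_offset)
    return (rx + tx, rz + tz), yaw + yaw_offset

# Hypothetical measurement: the user sits off-centre and is turned slightly.
offset = calibrate(head_pos=(0.4, -1.2), head_yaw=0.5,
                   seat_pos=(0.0, -0.6), seat_yaw=0.0)
calibrated_pos, calibrated_yaw = apply_offset(offset, (0.4, -1.2), 0.5)
print(calibrated_pos, calibrated_yaw)
```

Applying the same offset to the hand controllers and other tracked devices keeps everything consistent relative to the virtual desk.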

Finally, the bread and butter of science: collecting data! Most of the data was just run-of-the-mill Unity logging (co-bot helping, VR helmet position, hand positions, assembly piece positions), with some attention paid to timing and to starting and stopping runs. A bit more unusual were the eye-tracking and a separate heart-rate monitor. Together with user feedback, this should provide enough information to measure cognitive strain.